The Urgency of Rethinking U.S. Nuclear Energy Regulation

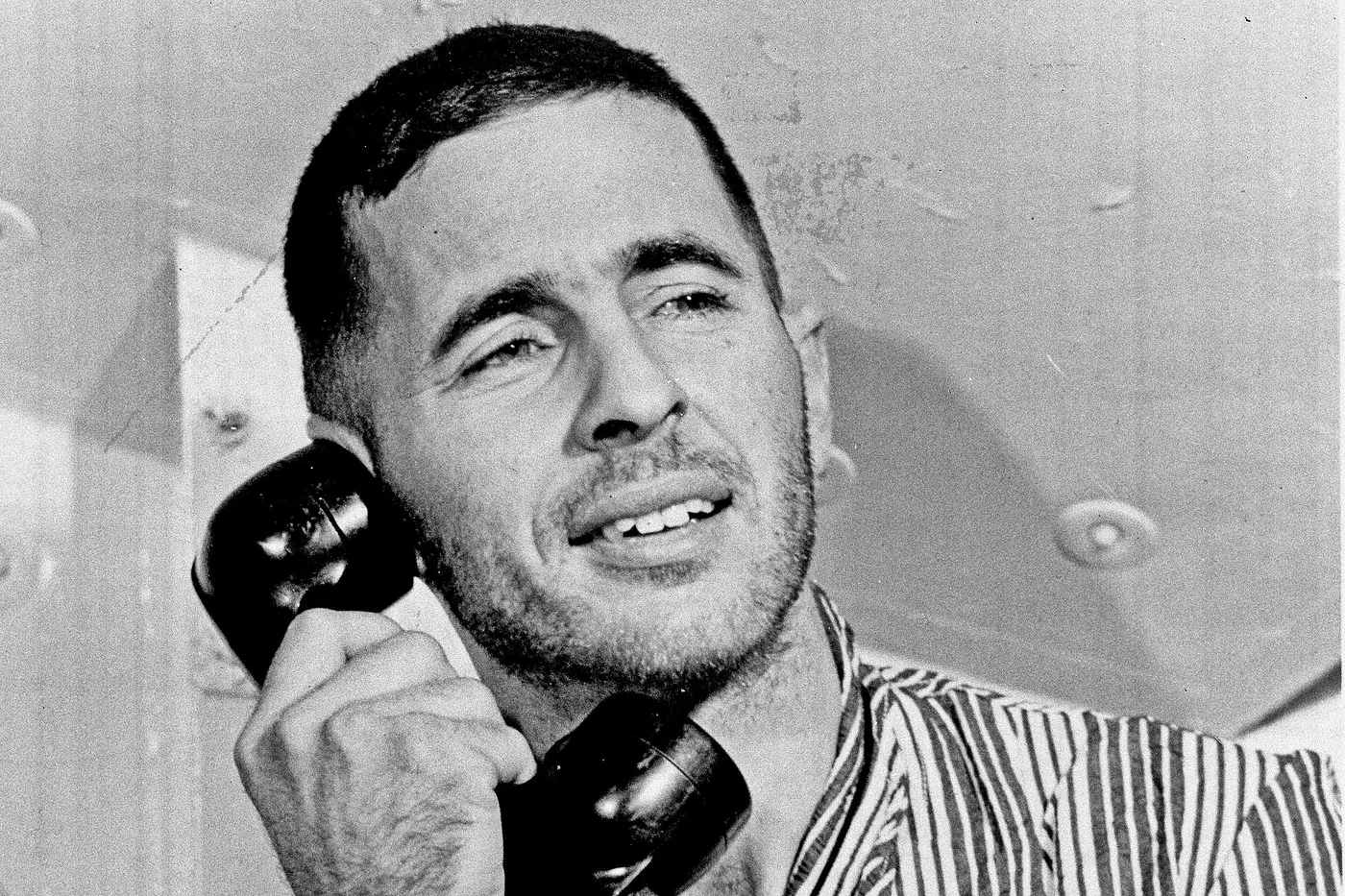

William A. Anders, first chairman of the Nuclear Regulatory Commission, 1975–76.

Executive Summary

It is widely perceived that Americans have two options to reduce the impact of our electricity consumption on the climate: (1) reduce our consumption of electricity; (2) transition from fossil fuels to non-dispatchable energy sources like wind and solar. The problem is that neither of these options is realistic.

The U.S. Energy Information Administration projects that, over the next three decades, U.S. electricity needs will increase by 913 billion kilowatt-hours: 24 percent above 2022 levels. And wind and solar energy are not reliable enough to meet that increased demand, let alone to transition us from existing fossil fuel-powered electricity.

Americans will pay a heavy price if we fail to meet existing and future electricity demand. Household energy prices will increase, as will the frequency of blackouts. Economically important industries may leave the U.S. for locations where electricity is more reliable. These problems will fall disproportionately on lower-income Americans, because energy-driven inflation will represent a greater share of their incomes, and because energy-driven employment represents a greater share of their job opportunities.

The one way out of this dilemma is nuclear energy. Nuclear power is the only low-carbon source of electricity that has the density and scale to meet American electricity demand. Unfortunately, the U.S. Nuclear Regulatory Commission—established in 1974—has effectively shut down the growth of nuclear power in the United States.

Some people are afraid that nuclear energy is unsafe, and this perception of the risks of nuclear power has dominated the NRC’s regulatory posture. In fact, nuclear energy is very safe, as we explain in this paper, and far more reliable than alternative low-carbon energy sources. France safely and successfully generates 70 percent of its electricity from nuclear power. Ontario generates 58 percent. The U.S. generates 19 percent. If the U.S. had taken the path of France and Ontario from 1979 to 2020, its carbon emissions from coal-fired electricity would have declined by 45 percent. This would have prevented 24 gigatons of carbon dioxide emissions, all else being equal.

It is essential to the future prosperity of the U.S. that Congress incentivize the NRC to facilitate a significant increase in American nuclear electricity generation. Our key idea: link increases in the NRC’s budget—or that of a new “Nuclear Innovation Agency”—to increases in nuclear energy capacity. In this way, the NRC or its successor would have a powerful economic incentive to enable safe, functional nuclear infrastructure. Other ideas involve better aligning the NRC’s regulatory science with the empirical evidence regarding the safety of nuclear power.

Introduction

Abundant, reliable, and affordable energy is essential to American prosperity. Significant increases in energy prices have a particularly harmful impact on the budgets of poorer Americans. Families depend on energy to heat their homes, keep the lights on, and transport them to work. The price of the food they buy is dependent on the price of fuel. Blue-collar jobs gravitate toward and depend on low-cost energy. The recent spate of consumer price inflation is, in large part, due to sharply rising energy prices.

In addition, our energy policy must account for the environmental and climate impact of fossil fuels. According to the regular assessments of the United Nations’ Intergovernmental Panel on Climate Change, most geophysicists and many policymakers are concerned that the greenhouse gases emitted by fossil fuels — principally petroleum, natural gas, and coal — will lead to significant damage to ecosystems and the well-being of humans throughout the world.

Congress has taken significant action to reduce the United States’ dependence on fossil fuels and the emission of greenhouse gases. Elected officials from both parties have called for increased electrification and a shift to electric vehicles. The Biden White House set a goal “to create a carbon pollution-free power sector by 2035 and net zero emissions economy by no later than 2050.” Vastly increasing new low-carbon power generation will be critical for the U.S. to meet this significant future demand for electricity while displacing fossil fuels.

The only realistic way to protect the living standards and economic opportunities of lower-income Americans, while making progress toward reducing greenhouse gas emissions, is to expand nuclear energy production. In 2021, fossil fuels represented 77 percent of U.S. energy consumption, compared to eight percent for nuclear energy and five percent for wind and solar. Nuclear energy is the only low-carbon energy source capable of meeting the minimum demands of the electricity grid; i.e., of providing reliable base load energy at scale.

Nuclear power is the most consistent source of low-carbon electricity. Unlike wind and solar power, its output is not dependent on the weather. Nuclear’s base load power is critical for maintaining grid reliability, which is essential for keeping electricity prices affordable and displacing fossil fuels. In order to replace the electricity generated from coal and natural gas in 2021, the U.S. would need to produce and transmit an additional 2,474 terawatt-hours of electricity every year. Our present quality of life in the U.S. requires an immense amount of electricity, and demand for electricity will increase throughout the clean energy transition.

While beyond the scope of this paper, it is important to recognize that replacing the energy generated by coal and natural gas will be detrimental to numerous Americans and their respective families and communities. The transition away from coal in recent years has not been navigated in a way which has recognized the dignity of coal workers and their contributions to our access to affordable, reliable electricity and broader prosperity. This aspect of our clean energy transition must be thoughtfully considered, particularly as legislators impose new rules and regulations that will advantage other forms of energy — nuclear, wind, and solar power.

Renewables alone are not enough to meet U.S. energy needs

A grid powered solely by wind turbines, solar panels, and batteries will be neither reliable nor affordable. California, with immense public investment in renewables and by far the largest GDP of any state, is at present dependent on fossil fuels and, without significant policy changes, will be for decades to come. The costs of implementing a grid based solely on renewable energy and battery storage rise nonlinearly as a greater portion of the energy mix comes from non-dispatchable sources, like wind and solar.

A report by the Clean Air Task Force estimated that California would need 9.6 terawatt-hours of energy storage to maintain grid reliability with 80 percent of power coming from renewables. However, to maintain grid reliability with 100 percent of power coming from renewables would require 36.3 terawatt-hours of energy storage. Building batteries at this scale is impractical and the land use requirements to generate and transmit this power would be undesirable.
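The nonlinearity in these figures can be made concrete with a short calculation. The sketch below uses only the two storage estimates quoted above; the function name and the "storage per percentage point" framing are illustrative assumptions, not part of the Clean Air Task Force report.

```python
# Storage estimates quoted in the text (terawatt-hours of energy storage).
STORAGE_AT_80_PERCENT_TWH = 9.6
STORAGE_AT_100_PERCENT_TWH = 36.3


def storage_per_point(storage_twh: float, share_points: float) -> float:
    """Average storage needed per percentage point of renewable share."""
    return storage_twh / share_points


# Average over the first 80 points versus the final 20 points.
first_80 = storage_per_point(STORAGE_AT_80_PERCENT_TWH, 80)
last_20 = storage_per_point(
    STORAGE_AT_100_PERCENT_TWH - STORAGE_AT_80_PERCENT_TWH, 20
)
ratio = STORAGE_AT_100_PERCENT_TWH / STORAGE_AT_80_PERCENT_TWH

print(f"First 80 points: {first_80:.3f} TWh per point")
print(f"Last 20 points:  {last_20:.3f} TWh per point")
print(f"Total storage grows by a factor of {ratio:.2f}")
```

On these figures, each of the last 20 percentage points requires roughly eleven times as much storage as each of the first 80.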

Even in communities which overwhelmingly believe we need to invest in wind and solar power, these projects are running into opposition. Moreover, a severe weather event like Winter Storm Uri in Texas in 2021, or a severe forest fire, could impede electricity production for days, and battery storage estimates cannot account for such events. It would be negligent for any government to pursue an energy policy that would leave its grid vulnerable to catastrophic failure.

Although California leads the country in utility-scale battery storage, it would need to multiply its storage capacity by a factor of 10,000 to achieve 100 percent renewable energy reliance. Even if this venture could succeed in California, it would not be a model for the rest of the world to follow. Additionally, if our goal is to accelerate the reduction of global carbon emissions, the batteries necessary to take California from 80 percent to 100 percent renewables would be better deployed to enable more renewable energy in a locality with a more carbon-intensive energy mix.

Bringing the U.S. nuclear industry into the 21st century

Renewable energy, batteries, and nuclear power will all be necessary to meet our climate goals while providing Americans with clean, affordable, and reliable energy. The present nuclear power regulatory regime, however, does not reflect the United States’ commitment to our sustainability goals, the necessity of nuclear power to reach those goals, or the risks presented by nuclear power plants relative to other sources of dispatchable power.

The U.S. needs to reform its nuclear power regulations to complete a successful clean energy transition. Congress has demonstrated its interest in advanced nuclear technologies by allocating $1.23 billion for the Advanced Reactor Demonstration Program (ARDP) in the Infrastructure Investment and Jobs Act. In the same bill, Congress affirmed the importance of our existing nuclear power plants by allocating six billion dollars to the Civil Nuclear Credit Program, which temporarily subsidizes unprofitable reactors through 2026. But these measures are incremental in nature, relative to the importance of expanding nuclear energy output.

Empirically, two of the most successful transitions away from high carbon emissions energy sources were achieved by Ontario, Canada and France. Ontario and France generate 58 percent and 70 percent, respectively, of their electricity from nuclear power. Their aggressive buildout of nuclear power plants enabled them to retire their coal plants and reduce emissions, while delivering affordable and reliable energy to their citizens. Nuclear power is not inherently expensive, but has been rendered less competitive by a combination of bad science and counterproductive policy.

Through a series of regulatory changes, the U.S. can learn from Ontario and France, enabling its nuclear sector to deliver more reliable and affordable energy for Americans. These policy changes can not only help Americans, but also people in other countries, by reducing net global carbon emissions.

The stagnation of the U.S. nuclear industry

The Atomic Energy Act of 1946 created the Atomic Energy Commission (AEC), the precursor to the Nuclear Regulatory Commission (NRC). Congress decided to bring the development of nuclear weapons and power under the control of civilian leaders instead of the military. This act was amended by the Atomic Energy Act of 1954 to establish a civilian nuclear industry. To further incentivize civilian nuclear power, Congress passed the Price-Anderson Nuclear Industries Indemnity Act in 1957, which introduced processes and limited liability for producers in the event of a meltdown.

The United States’ nuclear power industry was wildly successful in the early years under the AEC. Nuclear energy was capable of competing with more established fuels like coal. From 1970 to 1974, Commonwealth Edison of Chicago built six nuclear generating units for $147-248 per kilowatt (kW). Coal units built by ComEd between 1965 and 1975 cost $113-218 per kW.

American utilities ordered 45,000 MW of nuclear capacity by 1967, only nine years after the first commercial power plant had begun operation. From 1950 to 1974, utilities ordered 248 nuclear power plants. By 1960, American nuclear power plants generated one terawatt hour of electricity per year. At the end of 1974, American nuclear power plants generated 144 terawatt hours of electricity per year.

In the 1970s, however, nuclear regulators began making regulatory changes that initiated the stagnation of nuclear power in the U.S. The AEC released a series of “Regulatory Guides” which would eventually become de facto regulations under the NRC, because utilities judged that proposing alternatives to the NRC’s approach was worth neither the cost nor the business risk. The nuclear power industry was strongly profitable and was not worried about a regulatory ratchet. However, the regulatory codes and standards quickly ballooned, according to Vaclav Smil:

As of January 1, 1971, the United States had some hundred codes and standards applicable to nuclear plant design and construction; by 1975, the number had surpassed 1,600; and by 1978, 1.3 new regulatory or statutory requirements, on average, were being imposed on the nuclear industry every working day.

The rising regulatory burdens on nuclear power plants gained a new significance with the 1979 energy crisis, stagflation, and a drop in energy demand. Nuclear’s competitors, particularly coal plants, worked to cut their costs and consolidated in order to resume profitability. However, the nuclear industry was less responsive to these changes in market conditions. The time to build a new nuclear generating unit nearly tripled from the early 1970s to the early 1980s. Utilities canceled their orders for over 100 plants from 1970 to 1984.

ALARA: An extremist approach to radioactive emissions

In 1971, the AEC adopted a new policy which required all nuclear plants to reduce radioactive emissions to an amount as low as reasonably achievable (ALARA). Both regulators and commercial nuclear power plants are and have always been committed to safety. In the U.S., zero civilian nuclear workers have died due to exposure to radioactive materials. There have been a total of 10 deaths associated with civilian nuclear power in the U.S. throughout the industry’s entire history.

Costly and unnecessary requirements have hindered the growth of the U.S. nuclear industry and its global reach. Operating under the philosophy of ALARA, the NRC has mitigated risks from ionizing radiation at significant cost to the health and prosperity of the American people. Without these excessive regulations, more people would have benefited from nuclear energy, fewer Americans would have died prematurely from coal-related air pollution, and energy would have been more affordable. Furthermore, these regulations have resulted in significant, unnecessary carbon emissions which increase the risks posed by climate change. The aggregate costs incurred due to the policy of ALARA significantly outweigh the marginal benefits of a further reduction in the risk of exposure to ionizing radiation.

As discussed below, the Linear No Threshold (LNT) model makes several assumptions about the risks presented by low-dose radiation exposure which do not reflect analyses of populations exposed to radiation or the practices of the world’s leading radiologists. Under LNT, the risks of low-dose exposure are exaggerated beyond what the empirical evidence supports.

The decision to adopt a combination of ALARA and the LNT model has reduced the competitiveness of the U.S. nuclear power sector. Applying ALARA to the LNT model of radiation justifies driving exposure far below the levels that have been shown to be harmful. In the context of market competition between nuclear plants and coal plants, ALARA puts undue demands on producers to mitigate the risks of exposure to low-dose radiation. ‘As Reasonably Achievable’ means that any profitable nuclear plant may be expected to allocate profits towards excessive risk mitigation. This deters entrepreneurs and would-be investors from seeking to innovate and compete. We do not need the ALARA regulatory standard in order to protect American civilians from exposure to levels of radiation that have been empirically demonstrated to be harmful.

The establishment of the Nuclear Regulatory Commission

In 1974, President Ford signed the Energy Reorganization Act into law, which established the NRC, an independent commission that oversees nuclear energy, medicine, and related safety and security issues. The purpose of the law was to separate out governance of military and civilian uses of nuclear energy.

Since the establishment of the NRC, no new reactors have been both approved and built. Only reactors approved before 1974 have come online since then. When the NRC approved Georgia’s Vogtle Units 3 and 4 in February 2012, they were the first new reactors to receive even a vote on a construction license since 1978. Other reactors approved by the NRC have been canceled or delayed and have not begun operations. Vogtle 3 and 4 are finally expected to begin operations in 2023.

The desire to mitigate the risks posed by radiation from nuclear reactors and waste is understandable. The U.S. has regulations that mitigate potential harm to workers and other civilians from ionizing radiation. But the goal of regulation should be to create a framework that provides the greatest net benefit by balancing the benefits of carbon-free energy with the low risks of harm from radiation. Eliminating the risk of any low-dose exposures to radiation does not strike that balance. The narrow focus on mitigating harms from radiation at the exclusion of all other considerations has resulted in greater poverty, air pollution, grid unreliability, and unnecessary carbon emissions. Put another way: the risk of a shortened lifespan due to air pollution from coal plants is significantly greater than the risk of shortened lifespan due to nuclear radiation. We can improve the well-being and lifespans of Americans by adopting a regulatory framework that more accurately reflects the comparative harms presented by low-dose ionizing radiation, pollution, poverty, and carbon emissions.

The basics of ionizing radiation

The fundamental science behind nuclear power is critical to assessing the threats posed by the emission of radioactive materials. As the NRC puts it on its website, “We live in a radioactive world, and radiation has always been all around us as a part of our natural environment.” Humans are exposed to radioactivity from the sun, air travel, bananas, medical procedures, and, yes, potentially from nuclear plant meltdowns or improper nuclear waste storage. Despite radiation being a constant part of the human experience, there is a tendency to rely on depictions in movies and TV shows, rather than the expertise of America’s world-class radiologists, nuclear engineers, and epidemiologists. Our regulatory policy is calibrated more to the fear stoked by Hollywood than to the empirical studies conducted by our nation’s leading institutions.

Matter is composed of elements: hydrogen, oxygen, gold, and the like. Every atom of a given element has the same number of protons, and that number differs from one element to the next. However, individual atoms of a given element can contain varying numbers of neutrons and electrons. An atom whose number of electrons does not equal its number of protons carries a net charge and is called an ion. Atoms of the same element with different numbers of neutrons are called isotopes.

A few isotopes, like uranium-235, can undergo fission and split into two lighter isotopes when hit with a neutron. (The number “235” in uranium-235 signifies the total number of protons and neutrons in the uranium isotope’s nucleus. All uranium atoms have 92 protons; hence, a uranium-235 nucleus has 92 protons and 143 neutrons.) An immense amount of energy is released when the uranium-235 nucleus splits. Nuclear fission reactors work by sustaining a chain reaction of this fission process and channeling the released energy to generate electricity. The precise details vary depending on the design of the reactor, but the following is a simplified explanation of the fission process.

The two lighter isotopes created through fission are called fission products. Some of these products are radioactive isotopes, which are inherently unstable and will decay into another isotope. The half-life of an isotope is the time it takes for half of a given quantity of that isotope to decay into something else. When the isotopes decay, they release energy in the form of alpha particles, electrons, or high-energy photons. These fission products are part of the waste generated by nuclear power plants.
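The definition of half-life implies a simple rule: after each half-life, half of what remained is gone. A minimal sketch of that rule follows; the function name and the 30-year half-life are illustrative assumptions, not values taken from this paper.

```python
def remaining_fraction(elapsed: float, half_life: float) -> float:
    """Fraction of an isotope left after `elapsed` time, given its half-life.

    Both arguments must use the same time unit.
    """
    if half_life <= 0:
        raise ValueError("half_life must be positive")
    return 0.5 ** (elapsed / half_life)


# Hypothetical isotope with a 30-year half-life (an illustrative value).
for years in (0, 30, 60, 90):
    print(years, remaining_fraction(years, 30.0))
```

After three half-lives, one eighth of the original quantity remains, which is why short-lived isotopes stop being a hazard quickly while long-lived ones emit radiation slowly over a long time.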

The human body is composed of trillions of cells and those cells are mostly composed of water. If one of the particles released by the decay of isotopes can enter a cell, it can damage the chemical bonds of the water molecule. Particles capable of causing this damage are called ionizing radiation. If a person is exposed to a high dose of ionizing radiation over a short period of time, they may suffer and die from Acute Radiation Syndrome, like many of the first responders at Chernobyl. At lower but still significant levels, exposure to ionizing radiation could likewise result in increased risk of cancer and other health problems.

However, if a person is exposed to insignificantly low doses of ionizing radiation, as we all are every day, the body has mechanisms to repair damage. Humans expose themselves to higher levels of ionizing radiation by living at higher elevations, in Colorado or Finland, flying on airplanes, and living within 50 miles of a coal power plant. The dose of the exposure matters in both magnitude and time period.

To quantify low doses of radiation, the standard international unit of measure is the sievert (although the NRC uses the rem, which equals 0.01 sievert). Every 1,000 miles of air travel exposes a person to roughly 0.01 millisieverts (mSv), or about one millirem, of ionizing radiation. Living in the Colorado plateau area around Denver will, on average, expose a person to 0.9 millisieverts of background radiation per year. On the other hand, living at sea level, like on the Gulf or Pacific Coasts, will expose someone to roughly 0.23 millisieverts of background radiation per year. Controlling for other variables, these differences in background radiation do not result in different rates of cancer.
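Because U.S. regulatory documents report doses in rem while most international sources use sieverts, it helps to keep the conversion explicit. The sketch below assumes only the relationship stated above (1 rem = 0.01 sievert); the helper names are hypothetical.

```python
REM_PER_SIEVERT = 100.0  # 1 rem = 0.01 sievert, so 1 mSv = 100 mrem


def msv_to_mrem(millisieverts: float) -> float:
    """Convert a dose in millisieverts to millirem."""
    return millisieverts * REM_PER_SIEVERT


def mrem_to_msv(millirem: float) -> float:
    """Convert a dose in millirem to millisieverts."""
    return millirem / REM_PER_SIEVERT


# Annual background figures quoted in the text, expressed in millirem.
print(f"Denver area: {msv_to_mrem(0.9):.1f} mrem per year")
print(f"Sea level:   {msv_to_mrem(0.23):.1f} mrem per year")
```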

Given this background, how should we define the level of radiation exposure that is dangerous to humans? In an effort to do this, regulators adopted a model called Linear No Threshold. Unfortunately, this model has significant limitations.

Regulators measure radiation risks using an unscientific method

The Linear No Threshold model is a dose-response model used to estimate the negative health consequences of exposure to ionizing radiation. The LNT takes the harm observed at high doses of radiation, where the evidence is empirical, and extrapolates it along a straight line down to extremely low doses. It assumes that exposure to any level of ionizing radiation leads to a marginal increase in the probability that a human will suffer from radiation-induced cancer or other health issues. The model overstates the risks posed by exposure to low doses of ionizing radiation. The NRC and the Environmental Protection Agency (EPA) endorse the LNT, while the United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR) no longer uses the LNT when analyzing the harms caused by low-dose exposures to ionizing radiation.
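The extrapolation the LNT performs can be sketched in a few lines: draw a straight line through the origin and a single high-dose observation, then read risk off that line at any lower dose. The anchor point below is a placeholder chosen for illustration, not a measured value, and the function names are hypothetical.

```python
def lnt_slope(anchor_dose_msv: float, anchor_excess_risk: float) -> float:
    """Slope of a straight line through the origin and one high-dose point."""
    if anchor_dose_msv <= 0:
        raise ValueError("anchor dose must be positive")
    return anchor_excess_risk / anchor_dose_msv


def lnt_excess_risk(dose_msv: float, slope: float) -> float:
    """Excess risk the linear no-threshold assumption assigns to a dose."""
    return slope * dose_msv


# Placeholder anchor: NOT a measured value, chosen only for illustration.
slope = lnt_slope(anchor_dose_msv=1000.0, anchor_excess_risk=0.05)

for dose in (1000.0, 100.0, 1.0, 0.01):
    risk = lnt_excess_risk(dose, slope)
    print(f"{dose:>8} mSv -> modeled excess risk {risk:.2e}")
```

Whatever anchor is chosen, the line never reaches zero risk until the dose itself is zero, which is the "no threshold" assumption this section disputes.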

Collectively, there exists a vast array of facts and general knowledge about ionizing radiation effects on animal and man. It cannot be disputed that the depth and extent of this knowledge is unmatched by that for most of the myriads of other toxic agents known to man. No one has been identifiably injured by radiation while working within the first numerical standards first set by the NCRP [National Council on Radiation Protection and Measurements] and then the ICRP [International Commission on Radiological Protection] in 1934. The LNT is a deeply immoral use of our scientific heritage. — Lauriston Taylor, founder and past president of the National Council on Radiation Protection and Measurements, 1980

In 1934, the International Commission on Radiological Protection’s (ICRP) recommendations claimed that a normal healthy person could tolerate exposure to X-rays at the equivalent of an annual dose of 500 mSv. For comparison, the average accumulated exposure for all shipyard personnel in the nuclear navy is 8.7 mSv. The dose limits introduced by the ICRP in 1934 did result in a decline of excess skin cancer and leukemia cases among radiologists and radiological technicians. In 1954, the ICRP further reduced the annual dose guideline to 150 mSv, out of concern that exposure to ionizing radiation may result in higher cancer rates in the offspring of those exposed. Further analyses of the children of the survivors of the atomic bomb strikes on Hiroshima and Nagasaki have found this concern to be unsupported by the evidence. Many of these survivors were exposed to between 500 and 13,000 mSv within a single day. To put that in perspective, the maximum dose rate at the Fukushima Daiichi plant during the meltdown was between 1 and 10 mSv per hour.

The Linear No Threshold model does not accurately reflect the risks of low-dose ionizing radiation. There are other potential models which more accurately reflect the empirical data of the harms caused by low-dose ionizing radiation. The U.S. Naval Nuclear Propulsion Program released a report in May 2021, “Occupational Radiation Exposure from U.S. Naval Nuclear Plants and their Support Facilities,” referencing studies that demonstrate this point. The report itself is diplomatic, and often reaffirms the status quo, but it also includes this important point (emphasis added):

A very effective way to cause undue concern about low-level radiation exposure is to claim that no one knows what the effects are on human beings. Critics have repeated this so often that it has almost become an article of faith. They can make this statement because, as discussed above, human studies of low-level radiation exposure cannot be conclusive as to whether or not an effect exists in the exposed groups, because of the extremely low incidence of an effect. Therefore, assumptions are needed regarding extrapolation from high-dose groups. The reason low-dose studies cannot be conclusive is that the risk, if it exists at these low levels, is too small to be seen in the presence of all the other risks of life…Instead of proclaiming how little is known about low-level radiation, it is more appropriate to emphasize how much is known about the small actual effects.

According to a report by the National Academy of Sciences, “Studies of populations exposed to low-dose radiation, such as those residing in regions of elevated natural background radiation, have not shown consistent or conclusive evidence of an associated increase in the risk of cancer.” Similarly, in 1990, the National Cancer Institute completed a study of cancer in U.S. populations living near nuclear power plants and concluded, “there was no evidence that leukemia or any other form of cancer was generally higher in the counties near the nuclear facilities than in the counties remote from nuclear facilities.” Both of these studies are from the 1990s, and there has not been a study that directly contradicts their findings. There are more studies cited in that document conducted by researchers at Johns Hopkins University, New York University, and numerous government public health agencies.

The application of the Linear No Threshold model has significant policy implications. The model might encourage public health responses which are unjustified and harmful to populations exposed to low doses of ionizing radiation.

Because the model inflates the risks of exposure to low-dose radiation, it may lead government officials to respond to a nuclear reactor meltdown by ordering evacuations that are more harmful than sheltering in place. Researchers argue this was the case during the Fukushima Daiichi accident. More than 1,000 people died during the evacuation, while no deaths resulted from exposure to radiation.

The LNT in combination with ALARA imposes an undue burden on civilian nuclear power plants. In the context of the military, the costs of ALARA are not as stark because the military does not face the same market competition. However, energy markets are extremely competitive and the assumptions of the LNT require investments which impede the competitiveness of nuclear power.

Critics of the NRC’s model have proposed that the LNT should be replaced by a Nonlinear No Threshold model which better reflects the empirical data. Identifying a specific threshold presents an unnecessary challenge. Rather than specify a threshold, policy guidelines can require certain risk mitigation responses that vary based on the magnitude of an exposure or release of ionizing radiation. This type of informed decision-making is necessary, regardless of the specified model. American agencies and international organizations should adopt a model that better reflects contemporary research on the health risks posed by low-dose ionizing radiation.

Managing meltdowns

There have been three meltdowns which are responsible for most Americans’ fears and concerns around expanding nuclear power plants: Chernobyl, Fukushima Daiichi, and Three Mile Island.

Chernobyl. The Chernobyl accident was by far the largest nuclear plant catastrophe. During a planned safety test of one of the reactors, power dropped to zero and the operators were unable to restore it. The reaction became unstable and, instead of the reactor shutting down, a chain reaction began generating an immense amount of energy. The nuclear core melted down, causing explosions that destroyed the reactor’s building and started an open-air core fire. This released radioactive contamination into the air that spread for thousands of kilometers. The explosion killed two engineers and severely burned several others. Another 146 workers and first responders likely died from radiation exposure over the following decade, most of whom died within a month of the incident. Researchers attribute 16,000 deaths to the disaster. These numbers are contested, as they are statistical estimates that depend on which model of the effects of low doses of radiation is used. A researcher who uses the LNT will generate a larger number of statistical deaths. As documented in histories of the disaster, the Chernobyl nuclear power plant had serious design flaws and its operations were poorly managed in ways that no current or future plant would replicate.

Fukushima Daiichi. The Fukushima Daiichi accident was a tragedy and further impeded the resurgence of civilian nuclear power. In March 2011, a 9.0 magnitude earthquake and the massive tsunami that followed devastated coastal Japan. In response to the earthquake tremors, all eleven reactors at the nuclear plants in the affected region shut down automatically as designed. However, the Daiichi plant lost power, and when the tsunami flooded the plant, all but one of the backup generators were disabled. Without a consistent flow of power, the residual heat removal system ceased to function, causing three reactors to melt down. Hundreds of people worked diligently to restore power and restart the cooling systems. As they struggled to do this, a hydrogen build-up created by the meltdown triggered multiple explosions, causing significant damage to the plant and injuring several people at the site.

For five years following the accident, no deaths were attributed to the explosions or radiation resulting from the meltdown. However, in 2018, one plant worker’s family won a civil suit against Tokyo Electric Power Company, following his diagnosis with lung cancer and subsequent death. Between 1,383 and 2,202 people died during the evacuation from the surrounding area. Researchers attribute most of those deaths to fatigue and deprivation resulting from the earthquake and flooding.

The necessity of the evacuations prompted by concerns about radiation is highly disputed. The evacuations were extremely costly, but the levels of radiation exposure for most of the affected population were comparable to background radiation. Several reports from UNSCEAR have concluded that there have been no discernible adverse health effects as a result of the radiation released by the meltdown. The meltdown at Fukushima Daiichi could have been avoided. The plant was not abiding by best practices. The other eight reactors at the nuclear plants in the affected area were able to avoid similar issues.

Three Mile Island. The most salient meltdown in contemporary American history is the Three Mile Island accident. The accident began when a non-nuclear section of the plant experienced a mechanical or electrical failure that prevented the feedwater pumps from sending water to the steam generators. In response to rising pressure in the system, the pilot-operated relief valve opened. However, it became stuck open and coolant began to escape through the valve, resulting in a loss-of-coolant accident. Due to other design issues, the operators of the plant did not recognize that this valve was open and took a series of actions that resulted in the core overheating.

No one died from released radiation or the evacuation. The radiation exposure of local residents from the released radioactive gas was comparable to background levels. The Three Mile Island Public Health Fund commissioned independent researchers to investigate whether there were any changes in cancer rates resulting from the incident. The study concluded, “For accident emissions, the author failed to find definite effects of exposure on the cancer types and population subgroups thought to be most susceptible to radiation. No associations were seen for leukemia in adults or for childhood cancers as a group.” Despite this reality, the accident at Three Mile Island is often used to argue that the use of nuclear power is not justified due to the potential risk of a meltdown.

Learning the right lessons from nuclear accidents

Nuclear plant operators and designers globally have adopted improved designs and processes as they have learned from these accidents. Meltdowns pose real potential risks to workers and people in the surrounding areas; these risks can be mitigated through effective engineering. However, policy makers and nuclear advocates alike need to accept that the risk of meltdowns is very low, but not zero.

If we replace the LNT with a model that appropriately contextualizes the costs of low-dose ionizing radiation, we can accept that there will be inadvertent low-dose exposures. Mitigating exposure to more significant amounts of radioactive material is much easier than mitigating any and all exposure. An emphasis on avoiding all exposures has resulted in unnecessary complexity in nuclear power plant designs and associated protocols. If we abandon the LNT, we can pursue more robust and elegant designs which drastically reduce the probability of any civilians having an adverse health reaction due to a meltdown or other accidental exposure.

There are several dynamics of radioactive material to consider when discussing safety. The first is dose dependency. According to empirical data and the practice of radiology, it is much safer to have daily exposures of a lower dose of radiation than to experience a larger dose all at once. This is fundamental to radiation therapy and is called fractionation.

Under this principle and a nonlinear no threshold model, the dilution of radioactive material helps to mitigate risk by decreasing the dose that any single individual is exposed to over a given timeframe. We do not want radioactive material to be released but in the event that it is, it is less of a threat if it is diluted.

The second dynamic relates to the half-lives of the isotopes themselves. The half-life of an isotope is the time it takes for half of a given quantity of the radioactive material to decay. The decay occurs spontaneously, and when the material decays it releases ionizing radiation that can harm humans. Isotopes with shorter half-lives are generally more dangerous because the decay happens at a greater frequency, which may lead to an empirically significant exposure to ionizing radiation.

However, due to their shorter half-lives, these elements cease to be dangerous over a shorter duration of time. Radioactive materials that persist for longer durations are generally the ones which are easier to manage and less of a threat to humans and other biological life forms. This has implications for managing nuclear waste.
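The trade-off between decay rate and persistence can be sketched numerically. The snippet below is a minimal illustration using the standard exponential decay law; the function names are illustrative, and the half-lives for iodine-131 (about 8 days) and cesium-137 (about 30 years) are rounded textbook values assumed here rather than figures from this paper. The 4.5-billion-year figure for uranium-238 is the one quoted in the next section.

```python
import math

def fraction_remaining(elapsed_years: float, half_life_years: float) -> float:
    """Fraction of the original radioactive atoms that have not yet decayed."""
    return 0.5 ** (elapsed_years / half_life_years)

def decay_constant(half_life_years: float) -> float:
    """Decay constant ln(2) / half-life, in 1/year; larger means faster decay."""
    return math.log(2) / half_life_years

# Rounded, assumed half-lives (in years) for illustration only.
HALF_LIVES_YEARS = {
    "iodine-131": 8.0 / 365.25,
    "cesium-137": 30.0,
    "uranium-238": 4.5e9,
}

for isotope, half_life in HALF_LIVES_YEARS.items():
    rate = decay_constant(half_life)
    left_after_one_year = fraction_remaining(1.0, half_life)
    print(f"{isotope}: decay constant {rate:.3g}/yr, "
          f"fraction left after 1 year {left_after_one_year:.3g}")
```

Under these assumptions, the short-lived isotope decays far faster per atom but is essentially gone within a year, while uranium-238 decays extremely slowly and remains almost entirely intact.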

Handling nuclear waste responsibly

The problem of nuclear waste is, at its core, an engineering problem. The Connecticut Yankee nuclear plant operated from 1968 to 1996. During this time, it produced 110,000,000 MWh of electricity. This resulted in the production of 1,020 tons of extremely dense used nuclear fuel. This used fuel is currently stored in 43 secure casks in an area that is 70 feet by 228 feet.
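To make the scale of these figures concrete, the short sketch below derives a few ratios from the numbers quoted above. The variable names are illustrative, and the derived values are simple arithmetic rather than additional data.

```python
# Figures quoted in the text for Connecticut Yankee (1968-1996).
electricity_mwh = 110_000_000   # lifetime electricity generation
used_fuel_tons = 1_020          # used nuclear fuel produced
casks = 43                      # dry storage casks on site
pad_length_ft, pad_width_ft = 228, 70

mwh_per_ton = electricity_mwh / used_fuel_tons   # electricity per ton of used fuel
tons_per_cask = used_fuel_tons / casks           # average used fuel per cask
pad_area_sqft = pad_length_ft * pad_width_ft     # footprint of the storage area

print(f"Electricity per ton of used fuel: {mwh_per_ton:,.0f} MWh")
print(f"Average used fuel per cask: {tons_per_cask:.1f} tons")
print(f"Storage area: {pad_area_sqft:,} square feet")
```

By this arithmetic, each ton of used fuel corresponds to roughly 108,000 MWh of electricity, and the entire storage area covers about 16,000 square feet.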

Indeed, all of the nuclear waste generated in the U.S. since 1960 fits in an area the size of a football field stacked 30 feet high, and could be stored in casks engineered to withstand a missile strike (meant to emulate a plane crash). Most of the waste is uranium-238, which emits alpha particles. Alpha radiation particles are heavy, and cannot pass through a piece of paper or even dead skin. Unless a human ingests an isotope that is emitting alpha particles, they do not pose a threat to anyone. When critics of nuclear power say that the waste will be with us for billions of years, they are mostly referring to uranium-238 and its half-life of 4.5 billion years. It is important to remember that the uranium ore was likewise emitting alpha particles before it was mined, and that the waste will go on slowly and safely emitting alpha particles for billions of years.

The fission products pose genuine risks to humans and other creatures. They make up three percent of the total used fuel. Each of these products has its own risk profile. When stored within one of the dry casks, they are not a threat to human or animal life. Relative to the benefits of abundant, reliable energy and a smaller carbon footprint, the costs of the dry casks are negligible. Additionally, this waste might become a scarce resource. These materials might have applications in future reactor designs or other technologies, notably medical technologies. There are experimental breeder reactor designs that use nuclear waste as fuel to produce more power.

The first civilian nuclear power plant in the U.S. began operations less than a century ago. It is reasonable to believe that our understanding of the potential of these isotopes may change rapidly over a short time. There are proposals to store these materials in secure geological formations for millions of years to come, but retrieving them after the fact would then be uneconomical. By contrast, the cost of locally and safely storing these materials in dry casks is trivial, and this solution mitigates the risk of any missed future opportunities.

Policymakers do not need to commit to a decision about long-term storage at this time. There is room for debate on this topic, but the reality is that many of these fission products have become extremely valuable as research and technology have progressed. The short-term risks of storing this material in missile-resistant dry casks are insignificant and need to be compared to the risks associated with the waste from coal plants, additional carbon emissions, greater poverty, and grid instability. The U.S. should follow the examples of Canada and France to reap the benefits of nuclear power.

Nuclear power has made France Europe’s biggest power exporter

In 1974, the same year that the U.S. decided to effectively cease building new nuclear plants, French Prime Minister Pierre Messmer announced a plan to rapidly expand France’s nuclear power program. At the time, most of France’s electricity was produced by oil-fired plants from imported oil. The ‘Messmer Plan’ was intended to reduce France’s dependence on volatile global oil markets, in part because the country lacks good domestic sources of coal or oil.

At the time, France had eleven operational nuclear reactors, which produced eight percent of the country’s electricity. The Messmer Plan was enacted without any public debate. The decisions were made solely by the government, the Commissariat à l’Énergie Atomique (CEA), and what was then France’s public utility, Électricité de France (EDF), which is still overwhelmingly owned by the French government. EDF was authorized to build and operate 80 nuclear power plants, which would have produced 38 GW of power and supplied 50 percent of France’s electricity demand in 1985. The initial plan had been to build 80 nuclear plants by 1985 and 170 by 2000. Between 1971 and 1991, France built 59 new reactors. Lower-than-expected growth in electricity demand made the full Messmer Plan uneconomical.

France’s energy sector is heavily centralized and technocratic. The leaders of the CEA are all physicists or engineers, and their roles are only open to top graduates of France’s nuclear programs. They are not politically appointed. To some degree, this insulates the nuclear program from electoral concerns. There are currently 56 operational reactors and one under construction; 14 have been shut down. Support for nuclear power is not a particularly contentious issue in France, although it does have its detractors.

In 2021, France was the biggest net exporter of power in Europe. Since most of its electricity is generated by nuclear power, France’s electricity is cheaper for countries with carbon taxes to import. In the second half of 2021, French household consumers paid €0.202 per kWh for electricity, 14.7 percent less than the EU average and 37.5 percent less than German household consumers. The decision by Messmer, the CEA, and EDF to invest in France’s civilian nuclear power program has enabled the country to maintain a reliable grid, provide comparatively cheap household electricity for French citizens, and build a grid set to become independent from fossil fuels, particularly coal.

Nuclear power generates the majority of electricity in Ontario

Nuclear power plants produce 15 percent of Canada’s electricity. Canada’s first full-scale commercial reactor began operations in 1968. There are currently 19 operational reactors and six have been shut down. From 1971 to 1986, Canada built 18 nuclear reactors. All but one of Canada’s operational nuclear power plants are located in Ontario.

In 2021, Ontario’s nuclear power plants generated 58.2 percent of the province’s electricity, a total of 83 terawatt-hours. All of the active reactors are Canada Deuterium Uranium (CANDU) pressurized heavy-water reactors. CANDU reactors can run on unenriched natural uranium because they use heavy water, which absorbs fewer neutrons than ordinary water.

These reactors are advantageous because they remove the need for enrichment facilities, reducing costs and the potential for misuse in weapons manufacturing. These reactors have been exported to multiple countries. The last new plant to begin construction was Darlington 4 in 1985. It began operations in June of 1993.

Electricity is cheaper in Canada than in the U.S. In September 2021, the average Canadian household paid $0.117 per kWh. In comparison, American household consumers paid $0.159 per kWh. In Ontario, Bruce Power’s nuclear plants produce electricity for $0.0785 per kWh. The only producer that can compete with them is Ontario’s hydropower, which produces electricity for $0.058 per kWh. Ontario’s nuclear power program is particularly noteworthy because it has enabled the province to make a clean transition away from coal while maintaining grid reliability. The last coal plant in Ontario closed in 2014.

Can the U.S. learn from Ontario and France?

The U.S., Canada, and France all have different systems of government, histories, geographies, regulations, and natural resources. However, nuclear power is critical to the energy mix of all three countries to varying degrees. A comparative analysis should be useful as policy makers consider the future of nuclear power and the United States’ clean energy transition.

For the United States’ clean energy transition to be successful, it needs to deliver energy that is affordable, reliable, and low-carbon. To do that, policymakers will need to support a policy agenda that enables the U.S. to phase out its coal plants while expanding the energy supply. France and Canada, particularly Ontario, have thus far been remarkably successful in making transitions that rapidly decreased coal’s share of the electricity mix while preserving reliability.

While the U.S. has been successfully reducing coal’s share of the energy mix, the transition has thus far come with shocks to reliability and affordability. New England has been burning fuel oil to get through the winter. Texans spent days without electricity in the winter of 2021. In California and throughout the Midwest, electricity prices have been surging and there are growing concerns about the potential for rolling blackouts moving forward.

France generates a greater percentage of its electricity from nuclear power than any other country with a GDP above one trillion dollars, and is set to shutter its remaining coal plants within the next year. In 2020, 89 percent of all of France’s electricity was generated through hydro, wind, solar, and nuclear power. Less than one percent of France’s electricity was generated by coal-fired plants and only 6.7 percent of its electricity was generated through natural gas. France’s example demonstrates that nuclear power can successfully displace coal plants while maintaining affordability and reliability. The percentage of electricity generated by coal in France peaked in 1979 at 29.7 percent. As France’s new nuclear plants came online, coal was successfully displaced. The percentage of France’s electricity produced by nuclear power peaked at 79 percent in 2006, and nuclear power plants still generate roughly 70 percent of France’s electricity.

France does have its differences with the U.S. It is a much smaller country. It does not have bountiful resources of coal, oil, and natural gas. France’s energy market is functionally nationalized, as the government owns 84 percent of EDF’s shares. Unlike France, the U.S. does not have a relatively insulated technocracy that could unilaterally decide to lay the foundation for a nuclear future. France’s expansive nuclear build-out also creates its own lock-in: it would be difficult for the country to transition away from nuclear power.

The U.S. has a diversity of market structures, a patchwork of relevant federal, state and local regulations, and its own set of market incentives. The success of the French, however, still stands as an example for the U.S. in certain respects. France demonstrates that nuclear power can reliably and affordably provide the bulk of a nation’s energy. While France does not have cheaper household electricity than the U.S., it has cheaper electricity than many European countries while also producing fewer carbon emissions. France’s nuclear power program has, overall, been a success.

Canada, more broadly, is in some respects a better comparator to the U.S. than France. Canada has an abundance of natural resources, does not have a French-style technocracy, and spans a comparably large geographical area. Canada has cheaper household electricity prices than the U.S. and has significantly reduced its greenhouse gas emissions over the past two decades. The province of Ontario serves as a particularly interesting comparator. In 2020, Ontario produced 91 percent of its electricity through hydro, wind, solar, and nuclear power. Only 5.7 percent of Ontario’s electricity was generated through natural gas.

Ontario’s transition away from coal and other hydrocarbons has been notable. From 2005 to 2015, the percentage of Ontario’s electricity generated from coal power plants went from 18.54 percent to 0 percent. From 2005 to 2020, renewables went from making up less than one percent of Ontario’s electricity generation to 8.56 percent. However, unlike in the U.S., Ontario did not see a sharp increase in natural gas during this time period. Most of the gap created by the shuttering of their coal plants was replaced by increased output from wind, hydroelectric, and nuclear power.

Canada’s household electricity prices are lower than America’s. In this figure, Canada is represented by the orange bar, the U.S. in dark blue, and France in light blue. (Graphic: G. Dever / FREOPP)

FREOPP scholars have constructed a set of international indices that compare the U.S. to 31 other high-income countries with a population greater than five million. Among this set of countries, France ranks 16th out of 32 for the lowest household electricity prices. However, it ranks fifth-best in terms of lowest carbon dioxide emissions per capita. By contrast, the U.S. ranks ninth-best for household electricity prices, but 29th for carbon dioxide emissions per capita, beating out only Australia, Saudi Arabia, and the United Arab Emirates. Of the three countries, Canada has the lowest household electricity prices, ranking fifth, but it has only marginally lower carbon dioxide emissions per capita than the United States. If Ontario were compared to these countries, it would likely rank closer to third or fourth in terms of electricity prices and towards the middle of the pack in carbon dioxide emissions per capita.

France’s carbon dioxide emissions per capita are far lower than North America’s. In this figure, Canada is represented by the orange bar, the United States in dark blue, and France in light blue. (Graphic: G. Dever / FREOPP)

Nuclear capacities in the U.S., France, and Canada have been relatively stagnant since the 1990s. France’s newest reactor went online in 2002. Canada’s most recent new reactor went online in June 1993. The United States’ newest reactor, Watts Bar Unit 2, was completed in 2015, but construction of the plant had begun in 1973. Nuclear power hit its peak percentage of the U.S. electricity production mix in 2001. Demand had been relatively stagnant until recently. With demands for greater electrification, new clean power generation will need to be built.

What if America’s nuclear regulation had been like France’s?

The U.S. has maintained its dependency on coal power plants much longer than either France or Ontario. It is easy to assume that this is due to the United States’ abundance of coal, but American coal will be abundant long after the last coal plants are shut down. If the U.S. had nuclear regulations and a reactor approval process which was more amenable to market competition and innovation over the last 50 years, the U.S. would have transitioned away from coal power plants toward nuclear’s affordable and reliable base load energy.

What if the United States’ shift away from coal had been more comparable to France?

The percentage of France’s electricity generation from coal peaked in 1979 at 29.8 percent. The percentage of the United States’ electricity generation from coal peaked almost a decade later at 57.7 percent. More than 40 years later, France is set to shutter its last remaining coal power plants, while coal is still used to generate around 20 percent of all electricity in the U.S.

Let’s suppose that coal’s share of the United States’ electricity generation had likewise peaked in 1979, at 46.7 percent, and that its share of electricity generation had then changed at the same rates as France’s did from 1979 to 2020. Furthermore, let us assume for simplicity that 100 percent of the electricity that was no longer generated by coal plants was instead generated by nuclear power plants. If we adjust the portion of the electricity produced by coal in the U.S. over the time period, in 2020 coal would have produced 18.5 percent of the total electricity, compared to 19.3 percent in reality. This is not a very significant shift. However, the path to that point would have involved significantly fewer terawatt-hours of electricity being produced by coal power plants.

In this hypothetical, 35,605 terawatt hours of electricity were generated by American coal plants from 1979 to 2020, instead of the 66,359 terawatt hours that were produced by American coal plants in reality. According to the IPCC, the average life cycle carbon dioxide per kilowatt hour is 820 grams for coal and 12 grams for nuclear power. If the U.S. had taken France’s path, generating the equivalent amount of electricity would have produced 29.6 gigatons of carbon dioxide over the 1979–2020 period instead of the 54 gigatons of carbon dioxide that were produced by coal in actuality: a reduction in carbon emissions of 45 percent. For context, the U.S. generated 4.6 gigatons of carbon dioxide in 2020 alone.
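The arithmetic behind these figures can be reproduced with a short sketch. It assumes, as the hypothetical above does, that every terawatt-hour no longer generated by coal is generated by nuclear power instead, and it uses only the generation totals and IPCC emission factors quoted in the text; the function and variable names are illustrative.

```python
# Life-cycle emission factors quoted in the text (grams of CO2 per kWh).
COAL_G_PER_KWH = 820
NUCLEAR_G_PER_KWH = 12

# Coal generation from 1979 to 2020, in terawatt-hours, as quoted in the text.
ACTUAL_COAL_TWH = 66_359
HYPOTHETICAL_COAL_TWH = 35_605

def gigatons_co2(twh: float, grams_per_kwh: float) -> float:
    """Convert generation (TWh) and an emission factor (g/kWh) to gigatons of CO2."""
    kwh = twh * 1e9                     # 1 TWh = 1e9 kWh
    return kwh * grams_per_kwh / 1e15   # 1 gigaton = 1e15 grams

# Assumption from the text: displaced coal generation is replaced by nuclear.
displaced_twh = ACTUAL_COAL_TWH - HYPOTHETICAL_COAL_TWH

actual_gt = gigatons_co2(ACTUAL_COAL_TWH, COAL_G_PER_KWH)
hypothetical_gt = (
    gigatons_co2(HYPOTHETICAL_COAL_TWH, COAL_G_PER_KWH)
    + gigatons_co2(displaced_twh, NUCLEAR_G_PER_KWH)
)
avoided_gt = actual_gt - hypothetical_gt
reduction = avoided_gt / actual_gt

print(f"Actual coal emissions:      {actual_gt:.1f} Gt")
print(f"Hypothetical emissions:     {hypothetical_gt:.1f} Gt")
print(f"Avoided emissions:          {avoided_gt:.1f} Gt ({reduction:.1%})")
```

Under these assumptions the sketch yields roughly 54.4 gigatons in actuality and 29.6 gigatons in the hypothetical, a difference of a little under 25 gigatons, or between 45 and 46 percent.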

As acknowledged earlier, the U.S. and France have their fair share of differences. However, the success of France’s and Ontario’s nuclear programs lends credibility to the possibility that this scenario could have been realized. Nuclear power successfully competes in various localities throughout the U.S. and in other countries. The overall percentage of our electricity produced by coal in this hypothetical is not significantly different from our present situation. Many of the most significant reductions in emissions would have occurred during the 1980s and 1990s.

The point of this section is not to lament what might have been. Instead, it is meant to provide perspective as we accept the risks posed by carbon dioxide emissions and diligently work to make a clean energy transition that will provide reliable and affordable energy. Nuclear power can compete with other sources of energy, if it is allowed to by regulators. There has been a clear cost to the American approach, relative to that of France or Ontario.

It is complicated to assess the counterfactual of how a French- or Ontario-style regulatory framework would have affected U.S. household electricity prices over the past 50 years. Energy markets throughout the U.S. are diverse in both their energy mix and structure.

If the U.S. had doubled down on nuclear power in the late 1970s, the world would have been a different place. Rather than dwell on the distant past, the case for a greater supply of competitive nuclear power plants can focus on our recent past, our present, and the future.

Toward a new, pro-nuclear regulatory framework in the U.S.

The transition to clean nuclear energy is best accomplished by regulatory reforms that reduce barriers to entry for entrepreneurs, investors, and nuclear power generators. While Congress and President Biden have worked to increase federal subsidies for nuclear power, it is far more important to reform the Nuclear Regulatory Commission so as to reduce the costs and risks of building new nuclear generation capacity.

- Redesign the nuclear licensing process to be less prescriptive, and establish a program which will permit advanced nuclear reactors to be prototyped and tested before licensing. Advanced nuclear reactors are distinct from earlier models and inherently experimental. Advanced reactor prototypes should be subject to rigorous stress testing, data from which can be used by the NRC for licensure. The NRC should use objective and clearly defined performance requirements for licensure. Ideally, Congress should authorize a new nuclear regulator, the Nuclear Innovation Agency, with exclusive jurisdiction over next-generation technologies, whose budget could be linked to generation capacity under its jurisdiction (see below). This is because the NRC’s five-decade refusal to authorize new plants may make its institutional culture unable to adapt to the climate and energy needs of the 21st century.

- Congress should create a statutory mechanism whereby the Nuclear Regulatory Commission’s budget expands or contracts in proportion to the amount of power generated by nuclear plants. At present, there is a misalignment between the incentives of the NRC and the public’s need for reliable, affordable, low-carbon energy. The NRC should not be partially funded by license application fees, which incentivize a drawn-out application process. Instead, Congress should fund the NRC (and/or its proposed successor, the Nuclear Innovation Agency) through an appropriation tied to the number of kilowatt-hours produced by nuclear power plants, as measured by the U.S. Department of Energy. In this way, the NRC would be incentivized to ensure that nuclear energy is both safe and productive: unsafe plants that close down would reduce a budget that is tied to active nuclear energy production, as opposed to nuclear generation capacity.

- Abandon the policy of reducing radiation to levels ‘As Low As Reasonably Achievable.’ This policy—which seeks to all-but-eliminate radiation exposure—is untenable for a competitive and innovative civilian nuclear power industry. The Nuclear Regulatory Commission should instead define clear protocols for nuclear plants to abide by in the event of the release of varying levels of ionizing radiation. Failure to abide by the protocols should come with clearly defined penalties that incentivize compliance and operational excellence.

- Replace the Linear No Threshold model of toxicity from ionizing radiation with a model which more accurately reflects scientific data. The model most supported by the evidence is a nonlinear model which does not recognize a specific threshold. Rather than define a threshold, the NRC should outline various required responses to varying levels of radiation emissions. This policy change should extend to all relevant federal government agencies.

- Proactively communicate the shift away from the Linear No Threshold model and ALARA and the reasoning behind it. These changes reflect decades of research and diligent study by leading researchers, practitioners, and experts. They can and should be transparently and confidently shared with the public.

- Appropriate federal lands for pre-license prototypes. While the land should be appropriated by the federal government, the companies should pay to lease access to the land. Additional funding should be allocated to provide necessary infrastructure to conduct rigorous testing while maintaining safety. Require all prototype reactors to be insured by third parties and post a security deposit sufficient to cover worst-case damages to the land. The intention behind these policies is to reduce administrative burdens pre-prototype and preserve the test sites. Only credible projects will be able to secure funding and insurance to meet this threshold, reducing the necessity of costly pre-screening.

- Incentivize localities to store nuclear waste locally. Amend the Nuclear Waste Policy Act of 1982 to allow a portion of tax revenues to be rebated to communities that permit waste to be stored in their locality, or that contract with another locality to store the waste, in compliance with all necessary guidelines. The emphasis on committing to long-term storage in the initial legislation no longer reflects the present intentions of the U.S. government.

- Continue to store nuclear waste locally or in a centralized location in dry casks. These storage methods are low-risk and the best solution at present. The volume of waste generated does not present any real challenges. Moving the dry casks to a central location is a tractable engineering problem but not a short-term necessity, other than for political reasons. The emphasis on the need to move the nuclear waste to a different location or permanently dispose of it sends the wrong signal. The risks of nuclear waste need to be compared to the costs that would be incurred if nuclear power plants were no longer permitted to operate. We do not need to make any decisions about long-term storage at present and a performative urgency exacerbates concerns around radiation. Fission products may have applications in next generation reactors and new technologies. If humanity discovers that these scarce resources have valuable applications in the future, the real costs of long-term storage solutions, which make retrieval expensive, will be even greater than the worst case estimates.

Nuclear energy would lower electricity prices for Americans

It is essential to the future prosperity of the U.S. that we deliver more electricity at an affordable price. Only in this way will we meet the growing demand for electricity from population growth, economic growth, and the transition to electric vehicles that is already underway. The U.S. Energy Information Administration projects that U.S. electricity demand will increase by 913 billion kilowatt-hours over the next three decades.

To put that number in perspective, in 2021, U.S. nuclear power plants generated 778 billion kilowatt-hours of electricity. In other words, even doubling the amount of nuclear power generation in the U.S. would not be enough to meet the projected increase in demand.
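The comparison can be checked with a few lines of arithmetic. The sketch below uses only the two figures quoted above, plus the statement in the executive summary that the projected increase is 24 percent above 2022 levels; the implied 2022 baseline is a back-of-the-envelope derivation from that percentage, not a reported statistic, and the variable names are illustrative.

```python
# Figures quoted in the text, in billions of kilowatt-hours.
projected_increase_bkwh = 913   # EIA-projected growth in demand over three decades
nuclear_2021_bkwh = 778         # U.S. nuclear generation in 2021

# How many times today's nuclear output the projected increase represents.
ratio = projected_increase_bkwh / nuclear_2021_bkwh

# Demand growth left uncovered if nuclear generation doubled (adding 778 more).
gap_after_doubling_bkwh = projected_increase_bkwh - nuclear_2021_bkwh

# Derived, approximate 2022 baseline implied by "24 percent above 2022 levels".
implied_2022_demand_bkwh = projected_increase_bkwh / 0.24

print(f"Increase is {ratio:.2f}x current nuclear generation")
print(f"Gap after doubling nuclear: {gap_after_doubling_bkwh} billion kWh")
print(f"Implied 2022 demand: about {implied_2022_demand_bkwh:,.0f} billion kWh")
```

By this arithmetic, the projected increase is about 1.17 times current nuclear output, so a doubling of nuclear generation would still leave roughly 135 billion kilowatt-hours of new demand to be met by other sources.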

Localities throughout the U.S. have struggled to meet growing demand for air conditioning in the summer and heating in the winter. New nuclear reactors would help to meet that demand. Under a pro-nuclear regulatory framework, newly approved plants will have the ability to deliver energy to American households at a competitive price. New nuclear reactors will improve the reliability of the U.S. electric grid, a prerequisite for affordability.

As discussed above, battery storage costs increase as a greater proportion of a grid’s electricity comes from unreliable, intermittent sources like wind and solar power, because intermittent electricity increases the need for often-idle battery storage. The price of electricity will need to rise to pay for that excess storage capacity, or communities will be left vulnerable to rolling blackouts.

Either way, the costs of this unreliability will be devastating. The fragility of grids throughout the U.S. has already imposed significant costs on Americans. During Winter Storm Uri in Texas in 2021, electricity prices surged to prevent a total grid failure. This surge pricing left ratepayers owing $10.3 billion, more than Texans paid for electricity in all of 2020. The total costs in human life and damages are even greater. Nuclear’s clean firm power helps to reduce the total volatility in energy supply on the grid, allowing grid operators to more readily adapt to variations in electricity demand.

The successes of France and Ontario’s nuclear programs demonstrate that a nuclear-powered clean transition is possible. We can make a clean transition which improves health outcomes, maintains reliability, provides affordable electricity, and offers relative protection from the shocks of global energy markets. The governments of France and Ontario had a vision of a future less dependent on coal and created a path which was appropriate for their respective jurisdictions.

If the U.S. government is determined to meet its climate goals and to deliver a future with energy abundance, there is a path forward which is appropriate for America’s unique political environment and culture. We do not need a system where a technocracy is given unilateral power or loan guarantees for poorly structured projects that drag on at multiples of their initial bid.

A U.S. nuclear renaissance instead just needs a regulatory framework which reflects the empirical data on the risks of ionizing radiation, our demonstrated commitment to nuclear power, and our belief in the power of innovation and competitive markets. Over the course of the next decade, we can expand the supply of energy, decrease per capita emissions, and ensure that electricity stays affordable, reliable, and abundant.

A new American golden age of clean nuclear power

The U.S. has the opportunity to usher in a new golden age of nuclear power. To meet our climate commitments while maintaining access to affordable and reliable energy, the United States must reform its nuclear regulations. The ratchet of regulation and the adoption of ALARA and the LNT model have caused the United States’ nuclear industry to stagnate. Before the adoption of these policies, the American nuclear industry had proven that, at the most fundamental level, nuclear power is a safe and cost-effective method of energy production.

Since the 1970s, concerns over low doses of ionizing radiation, comparable to background radiation in localities throughout the world, have drained the American nuclear industry of competition and innovation. The unseen costs are all around us in the form of higher energy prices, greater emissions and pollution, and an increasingly fragile electrical grid.

The successes of Ontario and France’s respective nuclear programs demonstrate the viability of nuclear power as a primary source in the United States’ electricity mix. By reforming our current regulatory processes, we can open the United States’ nuclear industry to greater competition and innovation, mitigating the harms caused by carbon emissions. The changes suggested above better reflect Congress’ support for our existing nuclear reactors and the next generation of advanced nuclear reactors. Nuclear power is not inherently uncompetitive or costly, as demonstrated by the performance of nuclear power plants in France, Canada, and the United States. Russia, China, and other countries throughout the world are going to compete to design, build, and export advanced nuclear reactors. The U.S. should take a leadership role in the nuclear industry and confidently share the conclusions of the leading experts on ionizing radiation.

If we do not recognize nuclear power’s role in the clean energy transition, we risk significant and unnecessary harm. The clean energy transition can fail in at least two ways. First, it will fail if we maintain the status quo and do not take action to move to lower-carbon energy sources. Second, it will fail if we do not create a regulatory environment which allows for sufficient human ingenuity. We cannot naively move down a path towards net-zero emissions that disregards reliability and affordability. That would be a path back to burning coal and wood.

A government unable to maintain reliable power is one that will find itself without legitimacy and vulnerable to anyone committed to keeping the lights on. Nuclear power has been and will continue to be vital to a safe, affordable, and reliable transition to a lower-emissions future, and the U.S. should lead the way.
